Integrating Snowflake Database with SAP Data Intelligence: Building Custom Snowflake Operator for Data Loading

- Created 01/03/2024

- Modified 01/03/2024

Data is a critical asset for modern businesses, and the ability of technology to scale has produced a flood of big data. Data management and storage have become necessary components of modern operational processes.

Snowflake is a cloud data warehouse and one of the most popular data platforms, lauded for its support of multi-cloud infrastructure environments. It runs on top of cloud infrastructure such as Amazon Web Services or Microsoft Azure and lets storage and compute scale independently.

Integrating Snowflake Database with SAP Data Intelligence

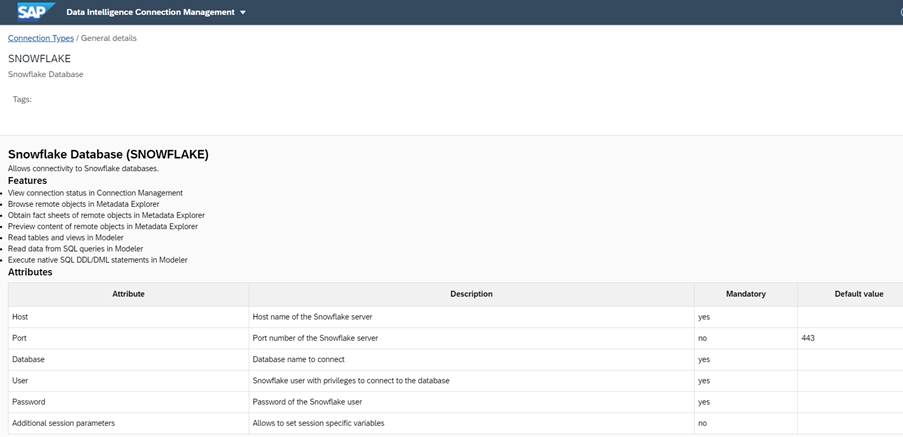

Both the On-Premises and Cloud versions of SAP DI provide connectivity to Snowflake Database.

However, up to and including SAP DI 3.2 On-Premises, the DI Modeler has no built-in operator for loading data into Snowflake Database.

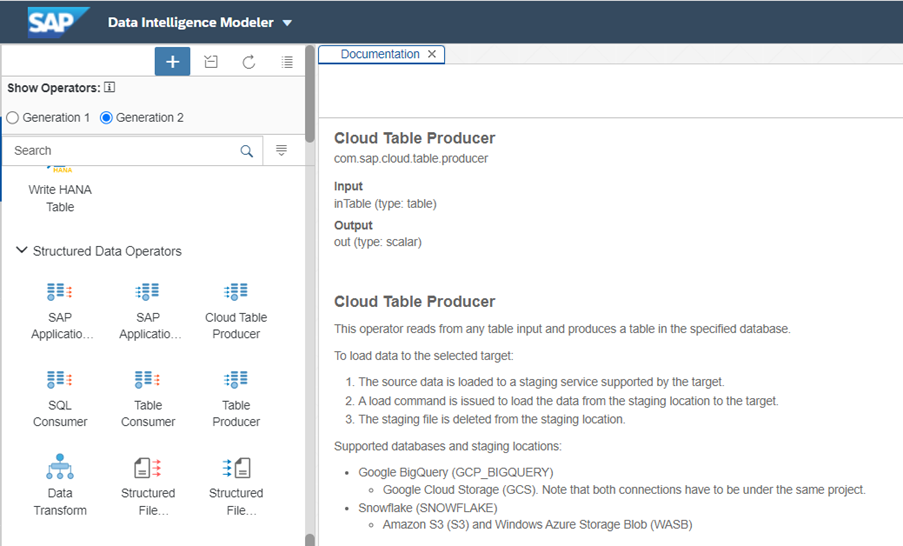

In the SAP DI Cloud version, the Generation 2 Cloud Table Producer operator supports loading data into Snowflake Database.

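The Cloud Table Producer operator has the following limitations:

- To load data into Snowflake, we must use one of the staging locations (Amazon S3 or WASB). You cannot use this operator if your current landscape does not include either of these cloud storage services.

- We cannot customize this operator to our requirements. For example, if we want to perform an UPSERT in Snowflake, this operator does not support that mode.

- This operator is compatible only with Gen2 operators, so we have to design the pipelines accordingly.

- Preventing record loss due to graph failure. Assume that while loading data into Snowflake, the graph/pipeline fails with an error and some records are not loaded. How will you deal with the failed records?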

To overcome these challenges and requirements, SAP Data Intelligence offers the option to build a custom Snowflake operator for loading data.
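UPSERT is one requirement a custom operator could cover. As a minimal sketch, assuming the incoming rows have first been written to a staging table, the script could generate a Snowflake `MERGE` statement like this. The function name, table names and column names are hypothetical and do not come from the original post, and identifiers are not escaped, so they must come from trusted configuration.

```python
from typing import Sequence


def build_merge_sql(target: str, staging: str,
                    key_cols: Sequence[str], cols: Sequence[str]) -> str:
    """Build a MERGE statement that upserts rows from `staging` into `target`.

    Assumption: both tables share the column names in `cols`, and all
    names are trusted (no quoting or escaping is done here).
    """
    if not key_cols:
        raise ValueError("at least one key column is required")
    non_keys = [c for c in cols if c not in key_cols]
    on_clause = " AND ".join(f"t.{c} = s.{c}" for c in key_cols)
    parts = [f"MERGE INTO {target} t USING {staging} s ON {on_clause}"]
    if non_keys:
        # Rows whose keys already exist in the target are updated.
        updates = ", ".join(f"t.{c} = s.{c}" for c in non_keys)
        parts.append(f"WHEN MATCHED THEN UPDATE SET {updates}")
    # Rows whose keys are new are inserted.
    col_list = ", ".join(cols)
    val_list = ", ".join(f"s.{c}" for c in cols)
    parts.append(f"WHEN NOT MATCHED THEN INSERT ({col_list}) VALUES ({val_list})")
    return " ".join(parts)


if __name__ == "__main__":
    print(build_merge_sql("SALES", "SALES_STG", ["ID"], ["ID", "AMOUNT"]))
```

Generating the statement from a column list keeps the operator reusable across tables; whether a staging table is the right approach depends on the landscape.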

Building a Custom Snowflake Operator in SAP Data Intelligence

To build the custom operator in SAP DI, we must first complete a few steps.

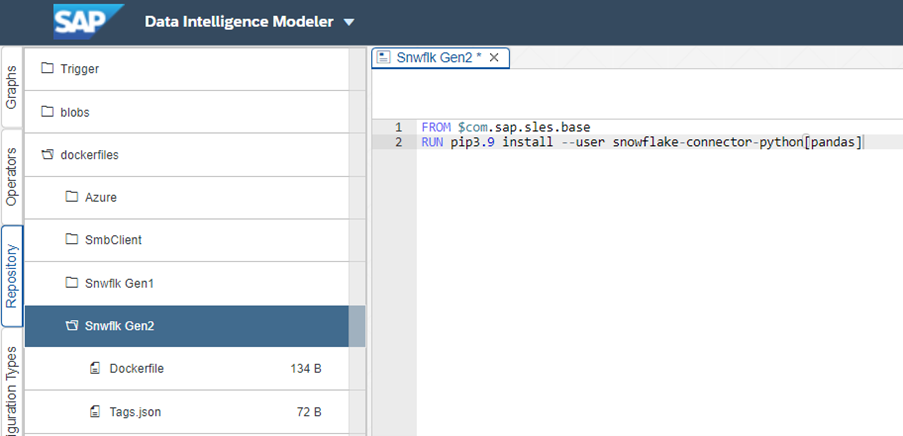

1. Build a Dockerfile in SAP DI to install the Snowflake Python library.

Go to the Repository tab and click Create Docker File.

Write the commands shown in the screenshot, save the file, and click Build.

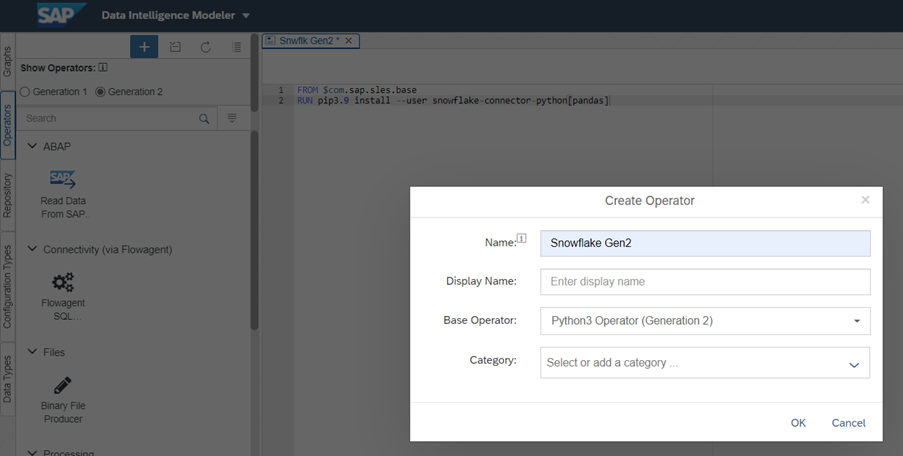

2. Create a new custom operator

Go to the Operator tab and select Generation 2 operators. Click the plus sign to create a new operator. Select Python3 (Generation 2) as the Base Operator.

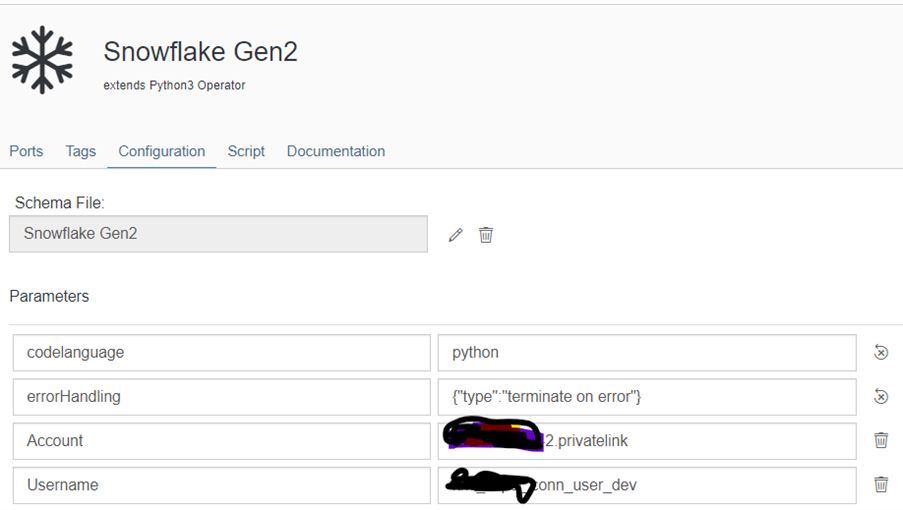

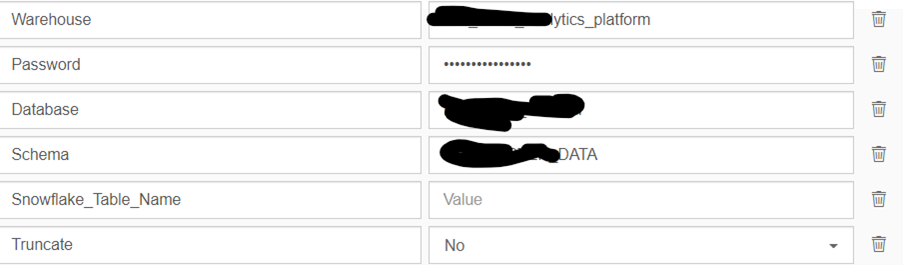

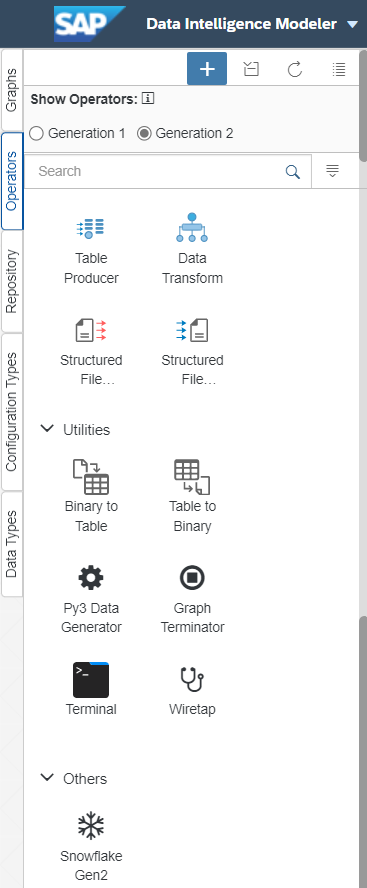

3. Go to the Configuration tab and define the parameters.

You can create parameters by clicking the edit button and defining the details.

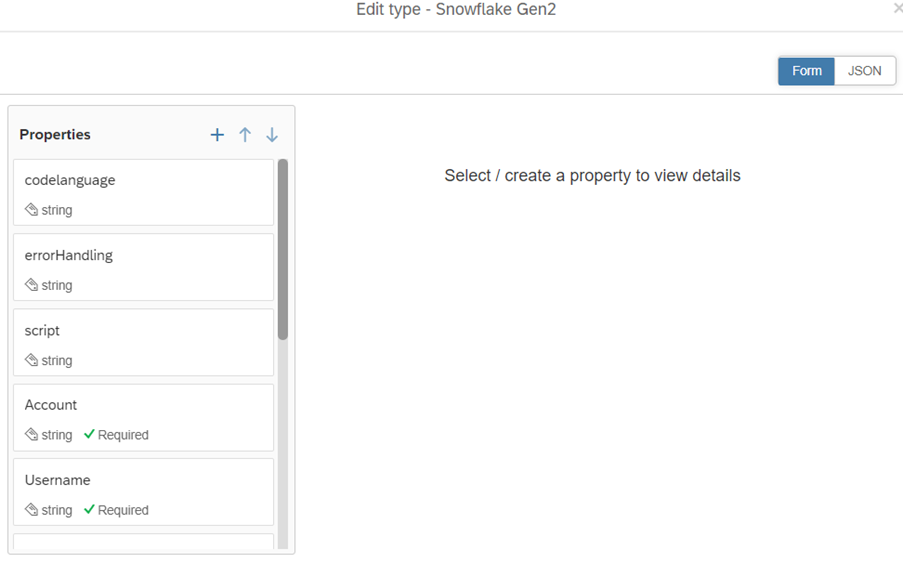
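The parameter names themselves are only visible in the screenshot, so the following is a sketch under assumptions: it supposes the operator is configured with the usual Snowflake connection settings plus a target table, and shows how the operator script might validate them before connecting. The class and field names are hypothetical; the keys returned match keyword arguments accepted by the Snowflake Python connector's `connect` function.

```python
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class SnowflakeParams:
    """Hypothetical operator parameters (names are assumptions)."""
    account: str
    user: str
    password: str
    warehouse: str
    database: str
    schema: str
    table: str

    def connect_kwargs(self) -> dict:
        """Return the connection settings, without the target table."""
        kwargs = asdict(self)
        kwargs.pop("table")
        # Fail early with a readable message if a parameter was left empty.
        missing = [name for name, value in kwargs.items() if not value]
        if missing:
            raise ValueError(f"missing parameters: {', '.join(missing)}")
        return kwargs
```

Inside the operator, the resulting dictionary could be passed as `snowflake.connector.connect(**params.connect_kwargs())`, assuming the library from the Docker image is available.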

4. Select an operator icon image and save the operator. The custom operator is now visible under the Generation 2 operator tab.
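The script inside the custom operator is not shown in the post. As one possible sketch of its load logic, the function below inserts rows in batches through a DB-API style cursor and collects the rows of any failed batch instead of losing them. It assumes a cursor with `executemany` and `%s` placeholders, as provided by the Snowflake Python connector; the function name, batch size and the broad exception handling are illustrative choices, not the author's implementation.

```python
from typing import Any, List, Sequence, Tuple


def load_in_batches(cursor: Any, table: str, columns: Sequence[str],
                    rows: Sequence[Sequence[Any]], batch_size: int = 1000
                    ) -> Tuple[int, List[Sequence[Any]]]:
    """Insert `rows` batch by batch; return (loaded_count, failed_rows)."""
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    placeholders = ", ".join(["%s"] * len(columns))
    sql = f"INSERT INTO {table} ({', '.join(columns)}) VALUES ({placeholders})"
    loaded = 0
    failed: List[Sequence[Any]] = []
    for start in range(0, len(rows), batch_size):
        batch = list(rows[start:start + batch_size])
        try:
            cursor.executemany(sql, batch)
            loaded += len(batch)
        except Exception:
            # Assumption: any error fails the whole batch. A real operator
            # would catch the connector's specific error types and log them.
            failed.extend(batch)
    return loaded, failed
```

The failed rows could then be written to a file or sent to a second output port for reprocessing, so a graph failure does not silently drop records.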

Create a Graph to Load Data from S4H CDS Views into Snowflake Database

We will create one graph that extracts data from an S/4HANA CDS view and loads it into a Snowflake table using the custom Snowflake operator.

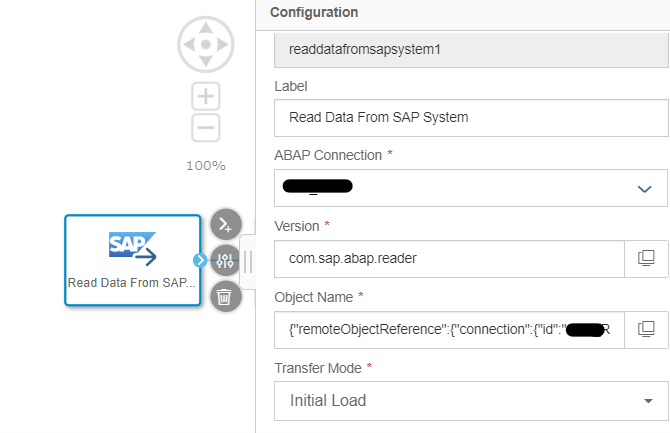

1. Create a Gen2 graph and drag the “Read Data from SAP” operator into it. Here we assume that a connection of type ABAP RFC has already been created to connect to the S/4HANA CDS view.

Select the Connection, Object Name and Transfer Mode in the operator configuration.

2. Drag the Data Transform operator into the graph and connect it to the Read Data from SAP operator. Define the output column mapping in the transformation operator.
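The column mapping itself is defined in the Data Transform operator's UI. Purely to illustrate what such a mapping does, here is a small sketch that keeps only the mapped source fields and renames them to target column names. The field and column names in the usage example are hypothetical.

```python
from typing import Any, Dict, Iterable, List, Mapping


def map_columns(records: Iterable[Mapping[str, Any]],
                mapping: Mapping[str, str]) -> List[Dict[str, Any]]:
    """Rename source fields to target columns; unmapped fields are dropped.

    Raises KeyError if a record lacks one of the mapped source fields.
    """
    return [{dst: rec[src] for src, dst in mapping.items()} for rec in records]


if __name__ == "__main__":
    source = [{"SalesOrder": "1001", "NetAmount": 250.0, "Internal": "x"}]
    print(map_columns(source, {"SalesOrder": "ORDER_ID", "NetAmount": "AMOUNT"}))
```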

Pedro Pascal
